There’s a long-running joke on the Internet that you can tell if someone’s using an Android phone by the bad quality of their selfies. While the memes definitely exaggerate quite a bit, there’s, unfortunately, a bit of truth behind them. Android phones have usually been ahead of iPhones in terms of camera performance, at least on paper. OEMs like LG and Samsung tend to use cameras with more pixels and larger apertures than competing iPhones. Specs aren’t everything, though. Part of the reason Apple can compete in the camera game is iOS’ software. All OEMs, from Apple to ZTE, use some software magic to make photos and videos look better. The companies use algorithms to reduce noise, tweak color saturation and contrast, and even brighten up dark scenes, all to make the end results more pleasing. This is, historically, where Android OEMs have fallen short of Apple.

Software Developments

More recently, though, things have begun to change. The biggest example of this change is probably Google and the Pixel lineup. In terms of hardware, the Pixel cameras really can’t compare to other flagships. Software is where Google makes up the difference, though. With features like Portrait Mode, Night Sight, HDR+, and Super Res Zoom, the Pixels have earned a reputation for having some of the best smartphone cameras on the market.

Other Android OEMs are following Google’s lead. Samsung, OPPO, Xiaomi, Vivo, OnePlus, etc., have all introduced their own versions of the Pixel’s more popular camera features, and they even have some unique features of their own. With buzzwords like “AI” and “machine learning,” Android OEMs are trying to convince you that their software is the software you want enhancing your photos and videos. We’ve gotten to a point where Android phones can genuinely compete against iPhones in all aspects of camera performance.

The Problem

Unfortunately, there’s still one major exception: third-party apps. Your super-duper 50-camera flagship might take amazing photos with the built-in camera app, but switch to Instagram, Snapchat, or even a third-party camera app, and it’s basically a guarantee that what you capture won’t look nearly as good. As if that weren’t enough, you also lose out on all the cool camera features and modes. This is because, unlike iOS, Android doesn’t really have a unified camera framework. Sure, the basic features are there. A third-party app can still take photos and videos, and use the flash. But what happens if your phone has a secondary sensor for wide-angle or telephoto? It’s possible that developers will be able to access that second sensor, but the method they use will have to be specific to your device.

Say you have an LG V40 (I know, I know, just imagine you do). The V40 has three sensors: standard, telephoto, and ultrawide. The built-in camera app has no problem switching between all these different sensors. But forget about using the ultrawide sensor on Instagram. Now, Instagram could take a look at how LG’s camera app uses the different sensors and develop a way for users to take wide-angle or zoom shots. But that would probably only work on the LG V40. Even though the Galaxy S10 has the same three sensor modes (standard, telephoto, and ultrawide), the Instagram team would have to develop another method for Samsung.

Now add Huawei, Vivo, OnePlus, Xiaomi, OPPO, Nokia, ZTE, HTC, and whatever other brand you can possibly think of to the mix. As you can imagine, trying to develop a method for accessing just the potential extra sensors for each phone from each brand would get incredibly tedious. And then you have to maintain compatibility with all current and new phones.
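To make the maintenance problem concrete, here is a minimal Java sketch of what per-brand camera access tends to look like inside an app. Everything in it is hypothetical: the `UltrawideOpener` interface, the strategy table, and the placeholder strategies stand in for real device-specific code, and the manufacturer string is assumed to come from something like `android.os.Build.MANUFACTURER` on a real phone.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;

// Hypothetical: each OEM exposes its extra sensors differently, so the app
// keeps one hand-written strategy per manufacturer it has researched.
interface UltrawideOpener {
    String open(); // describes which camera was opened
}

final class PerBrandCameraAccess {
    // Keys are lowercase manufacturer strings; values are placeholder strategies.
    private static final Map<String, UltrawideOpener> STRATEGIES = new HashMap<>();

    static {
        STRATEGIES.put("lge", () -> "ultrawide via LG-specific path");
        STRATEGIES.put("samsung", () -> "ultrawide via Samsung-specific path");
    }

    private PerBrandCameraAccess() {}

    /** Uses a brand-specific path if one exists, otherwise falls back to the main camera. */
    static String openUltrawide(String manufacturer) {
        UltrawideOpener opener = STRATEGIES.get(manufacturer.toLowerCase(Locale.ROOT));
        return opener != null ? opener.open() : "main camera (no known ultrawide path)";
    }

    /** Rough maintenance cost: one code path per (brand, feature) pair. */
    static int codePathsToMaintain(int brands, int featuresPerBrand) {
        return brands * featuresPerBrand;
    }
}
```

Every brand missing from the table silently falls back to the main camera, and the cost grows multiplicatively: ten brands (LG, Samsung, and the eight named above) times three features such as ultrawide, telephoto, and Portrait Mode is already 30 code paths, before counting differences between models from the same brand.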

These limitations apply to camera features as well. Things like Portrait Mode, Night Sight, and HDR+ either need specific per-device methods to use, or are completely inaccessible to third-party apps.

Obviously, for an app like Instagram, this isn’t too big a deal. It’s not their focus, and you could always use your phone’s camera app to take the shot first. But what about dedicated camera apps?

The Effects

Open the Google Play Store and search for “camera.” You’re going to find hundreds of results. Even with the improvements in first-party camera software, third-party camera apps are still very popular. Some aim to provide more technical features like manual exposure and focus (features that your phone’s camera app may not have). Others aim to provide a consistent UX across your devices.

Especially for the latter reason, Android’s camera fragmentation can make it incredibly difficult to develop and maintain a widely compatible camera app. If you’re looking to provide extra features, how are you going to include all the potential features of all the potential first-party apps? If you’re looking for a consistent experience, how can you realistically guarantee that accessing the wide-angle sensor will work on every device that has one?

The answer is: you can’t. You can try to support as many features on as many devices as possible, but in the end, it’s going to be a lot of work for relatively little reward. It isn’t hard to imagine that at least a few developers have simply given up on making a fully featured camera app for Android. In fact, several have.

The Casualties

Go do a search for camera apps on the Play Store. You’ll notice a few things. One, there are a lot of options. Two, most of them do pretty similar things (applying filters after the fact and such). Three, the more advanced options probably aren’t updated that frequently. You may even find results on Google for apps that are no longer on the store.

Recently, we’ve gotten a pretty major example of someone calling it quits. Moment decided to cease development on its Pro Camera app for Android.

.@moment is dropping its Pro Camera app for Android and focusing on iOS development. pic.twitter.com/dx9e1dv17B

— Justin Duino (@jaduino) February 28, 2020

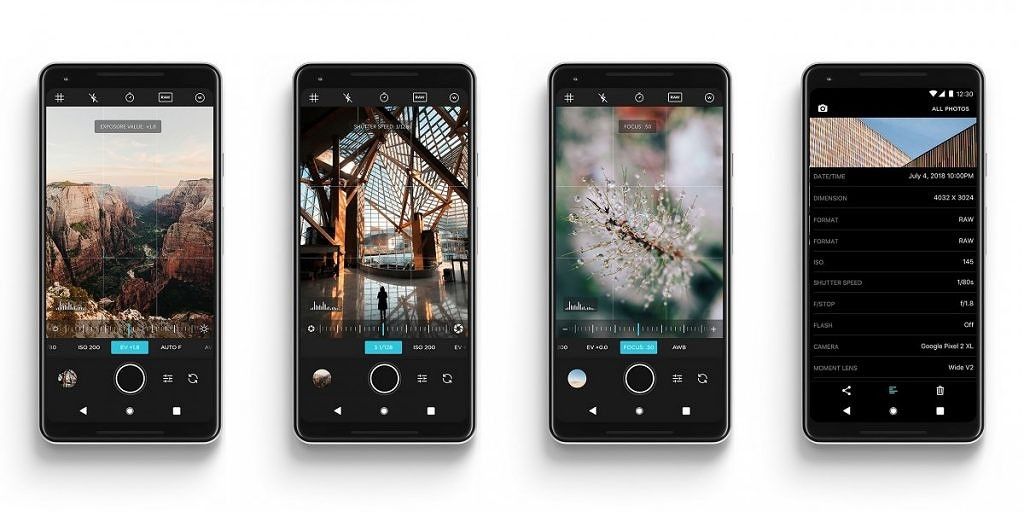

Moment’s Pro Camera app aimed to bring advanced photo and video features to Android. These are just a few of those features:

- RGB histograms

- Split focus

- Manual control over exposure, ISO, shutter speed, and white balance

- Focus peaking

- RAW capture

- Dynamic framerate and resolution changes

Moment Pro Camera screenshots. Via: 9to5Google

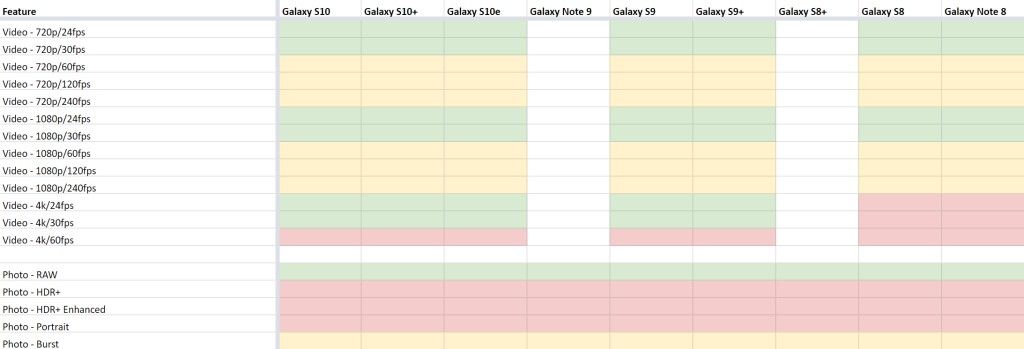

The problem is that a lot of these features simply don’t work on a lot of devices. Looking at Moment’s feature compatibility list is like looking at a picture of a dance floor. Even within the same product line, feature support is incredibly fragmented. After two years of work on the app, Moment no longer has the capacity to keep developing it.

Green = supported by Moment Pro Camera. Yellow = supported by the device but not by Moment Pro Camera. Red = not supported by the device. Image source: Moment. Retrieved via: 9to5Google.
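Moment’s chart is essentially a device-by-feature matrix with three states per cell. As a rough, hypothetical model (the class names, the feature subset, and the "Device A" placeholder are illustrative, not Moment’s data or code), an app could record those states and only switch on the green cells:

```java
import java.util.ArrayList;
import java.util.EnumMap;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// The three colours in Moment's chart.
enum Support {
    SUPPORTED_BY_APP, // green: the device exposes it and the app uses it
    DEVICE_ONLY,      // yellow: the device exposes it, the app does not
    UNSUPPORTED       // red: the device does not expose it
}

// A few features from Moment's list (illustrative subset).
enum Feature { RAW_CAPTURE, MANUAL_CONTROLS, FOCUS_PEAKING, FRAMERATE_CHANGES }

final class CompatibilityMatrix {
    private final Map<String, Map<Feature, Support>> rows = new HashMap<>();

    void set(String device, Feature feature, Support support) {
        rows.computeIfAbsent(device, d -> new EnumMap<>(Feature.class)).put(feature, support);
    }

    /** Cells nobody has filled in are treated as red. */
    Support get(String device, Feature feature) {
        Map<Feature, Support> row = rows.get(device);
        return row == null ? Support.UNSUPPORTED : row.getOrDefault(feature, Support.UNSUPPORTED);
    }

    /** Features the app can actually switch on for this device (green cells). */
    List<Feature> enabledFeatures(String device) {
        List<Feature> enabled = new ArrayList<>();
        for (Feature f : Feature.values()) {
            if (get(device, f) == Support.SUPPORTED_BY_APP) {
                enabled.add(f);
            }
        }
        return enabled;
    }

    /** Yellow cells: work the app team could still do for this device. */
    int backlog(String device) {
        int count = 0;
        for (Feature f : Feature.values()) {
            if (get(device, f) == Support.DEVICE_ONLY) {
                count++;
            }
        }
        return count;
    }
}
```

Every row has to be filled in and re-checked per device, which is exactly the kind of upkeep that wore Moment down.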

The Solution?

It’s been nearly 12 years since Android was first released, but we may finally have a solution to the camera fragmentation.

This solution comes straight from Google, although it isn’t built directly into Android. Instead, it’s a Jetpack support library. If you’re familiar with developing Android apps, you’ve probably run into AppCompat and the other AndroidX support libraries. These libraries from Google aim to make it easier for developers to maintain backward compatibility with older Android versions while still introducing new features and styles.

A newer addition to Jetpack (sort of) is the CameraX library. Like other Jetpack libraries, CameraX aims to make development easier, in this case camera development. In its most basic form, CameraX wraps Android’s Camera2 API, which lets apps probe and control the camera features on a device, provided the OEM exposes those features to the API. Users can check which camera features are exposed to the Camera2 API with the Camera2 API Probe app and compare that to the features available in the stock camera app.

Camera2 API Probe (Free, Google Play) →
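In practice, probing means reading what the camera reports and gating features on it. On a real device, Camera2 reports capabilities through `CameraCharacteristics.REQUEST_AVAILABLE_CAPABILITIES` (for example `MANUAL_SENSOR` and `RAW`). The sketch below is only a plain-Java model of that gating logic: the `Capability` enum and `ProControls` class are hypothetical stand-ins for the Android constants so it runs without the Android SDK.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Stand-ins for a few values Camera2 reports via
// CameraCharacteristics.REQUEST_AVAILABLE_CAPABILITIES on a real device.
enum Capability { BACKWARD_COMPATIBLE, MANUAL_SENSOR, RAW }

final class ProControls {
    private ProControls() {}

    /** Builds the list of controls to show, based only on what the camera reports. */
    static List<String> forCapabilities(Set<Capability> caps) {
        List<String> controls = new ArrayList<>();
        controls.add("Auto capture"); // assumed always available in this model
        if (caps.contains(Capability.MANUAL_SENSOR)) {
            // MANUAL_SENSOR covers manual exposure time and sensitivity (ISO).
            controls.add("Manual shutter speed");
            controls.add("Manual ISO");
        }
        if (caps.contains(Capability.RAW)) {
            controls.add("RAW capture");
        }
        return controls;
    }
}
```

If an OEM doesn’t report a capability, the corresponding control simply never appears, which is why the same app can look very different from one phone to the next.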

The benefit of using CameraX as a wrapper for the Camera2 API is that it handles many device-specific compatibility issues internally. This alone will be useful for camera app developers, since it can reduce boilerplate code and the time spent researching camera problems. That’s not all CameraX can do, though.
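Conceptually, a wrapper like this keeps device-specific workarounds out of app code. The following Java sketch is a hypothetical model, not CameraX’s internal design: the `Quirk` values, the quirk table, and the model names are invented for illustration. The point is only that the app makes one call while the wrapper decides which extra steps a given model needs.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical device quirks a wrapper might have to work around.
enum Quirk { EXTRA_FOCUS_DELAY, ROTATE_JPEG_MANUALLY }

final class CameraWrapper {
    private final Set<Quirk> quirks;

    /** Looks up this model's known quirks once; unknown models get none. */
    CameraWrapper(String deviceModel, Map<String, Set<Quirk>> quirkTable) {
        this.quirks = quirkTable.getOrDefault(deviceModel, Collections.emptySet());
    }

    /** The only call app code makes; returns the steps the wrapper performed. */
    List<String> takePicture() {
        List<String> steps = new ArrayList<>();
        if (quirks.contains(Quirk.EXTRA_FOCUS_DELAY)) {
            steps.add("wait for focus to settle");
        }
        steps.add("capture");
        if (quirks.contains(Quirk.ROTATE_JPEG_MANUALLY)) {
            steps.add("rotate JPEG");
        }
        return steps;
    }
}
```

Because the quirk table lives in the library rather than in every app, a fix added once benefits every app that updates the library.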

While that first part is mostly of interest to developers, another part applies to both developers and end users: Vendor Extensions. This is Google’s answer to camera feature fragmentation on Android. Device manufacturers can opt to ship extension libraries with their phones that let CameraX, and through it developers and users, tap into native camera features. For example, say you really like Samsung’s Portrait Mode effect, but you don’t like the camera app itself. If Samsung decides to implement a CameraX Portrait Mode extension on its phones, any third-party app using CameraX will be able to use Samsung’s Portrait Mode. This isn’t confined to that one feature, either: manufacturers can open up any of their camera features that fit CameraX’s extension types, such as Portrait (bokeh), HDR, Night, and Beauty modes.
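From an app’s point of view, the extension model boils down to an availability check followed by a fallback. Recent CameraX releases expose this through `ExtensionsManager` (for example its `isExtensionAvailable(...)` method) and mode constants such as `ExtensionMode.BOKEH`, `HDR`, `NIGHT`, `FACE_RETOUCH`, and `AUTO`. The sketch below is a simplified plain-Java model of that flow rather than the library’s API; `VendorExtensions` and `CaptureSession` are hypothetical names.

```java
import java.util.EnumSet;
import java.util.Set;

// Mode names follow CameraX's ExtensionMode constants; the classes are hypothetical.
enum Mode { BOKEH, HDR, NIGHT, FACE_RETOUCH, AUTO }

/** What an OEM's extension library advertises on a given phone. */
final class VendorExtensions {
    private final Set<Mode> shipped;

    VendorExtensions(Set<Mode> shipped) {
        // EnumSet.copyOf rejects an empty non-EnumSet collection, so guard it.
        this.shipped = shipped.isEmpty() ? EnumSet.noneOf(Mode.class) : EnumSet.copyOf(shipped);
    }

    boolean isAvailable(Mode mode) {
        return shipped.contains(mode);
    }
}

final class CaptureSession {
    private CaptureSession() {}

    /** Uses the OEM's effect when it ships one, otherwise a plain capture. */
    static String capture(VendorExtensions ext, Mode wanted) {
        if (ext.isAvailable(wanted)) {
            return wanted + " via vendor extension";
        }
        return "standard capture (" + wanted + " not offered on this device)";
    }
}
```

Because the check happens per mode and per device, the same app code works whether an OEM ships every mode, only a Night Mode, or nothing at all.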

Unfortunately, there is a caveat, which I hinted at earlier: this isn’t a requirement for manufacturers. Google says it will support Extensions on all new and upcoming Pixel devices, starting with the Pixel 4. OPPO says it has opened up its Beauty and HDR modes. Other OEMs could choose to make only their Night Modes available to CameraX, or they could choose not to implement any extensions at all. It’s entirely up to each manufacturer which of its devices support which CameraX extensions, if any.

Google used to maintain a list of devices that support Vendor Extensions and the camera features they open up, but it has not updated the list for the past several months. We reached out to Google a few weeks ago asking for an updated list, but the company has not yet provided one. For what it’s worth, a recent Google blog post states that devices from Samsung, LG, OPPO, Xiaomi, and Motorola (on Android 10) provide some extension functions, but the post does not specify exactly which devices are supported or which functions they provide.

If enough manufacturers do decide to implement extensions, Android’s third-party camera scene will be a whole lot brighter. Developers won’t have to waste time reimplementing a camera feature for as many devices as they can manage, since CameraX’s framework will take care of it. There will be less feature fragmentation, since similar features across devices will be accessible through a common interface. And there are many more possibilities.

Of course, this all depends on Google’s ability to convince manufacturers to implement CameraX Vendor Extension libraries going forward. Unless CameraX is widely adopted, it will just add to the current fragmentation. Personally, though, I’m hopeful. Google can be very convincing when it wants to be, and it seems like a lot of work is going into CameraX. It’s exciting to see a possible solution to Android’s camera issues on the horizon, and I look forward to seeing how CameraX will improve and expand over time.

What do you think about CameraX? Will Google succeed in making a unified camera experience for Android?

The post Google’s CameraX Android API will let third-party apps use the best features of the stock camera appeared first on xda-developers.
