ARCore is Google’s SDK for creating augmented reality experiences on Android and iOS. On Android devices, it’s delivered as part of the Google Play Services for AR app. Late last year, Google previewed the ARCore Depth API, which makes AR more immersive on devices with just a single camera. Now the API is publicly available to developers on Android and Unity, according to a Google blog post.

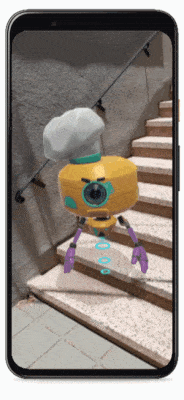

The ARCore Depth API uses Google’s depth-from-motion algorithms to generate a depth map from a single RGB camera. As the user moves the camera around, it captures images from different angles and compares them to estimate depth. One of the API’s key capabilities is occlusion, which lets digital objects appear convincingly behind real-world objects. Beyond occlusion, the Depth API also enables realistic physics, interaction with real-world surfaces, environmental traversal, and more. As the GIFs embedded below show, these features make augmented reality experiences more realistic. The game Five Nights at Freddy’s AR: Special Delivery uses this feature, and Snap Inc. has used the API for its Dancing Hotdog and new Undersea World Snapchat Lenses. Starting today, the Depth API is generally available to developers in ARCore 1.18 for Android and Unity.
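At its core, occlusion is a per-pixel depth comparison: a virtual pixel is drawn only where the virtual object is closer to the camera than the real surface in the depth map. The sketch below is a minimal, hypothetical illustration in plain Python, not the ARCore API. The `composite` function, the list-of-lists depth maps, the millimetre units, and treating a depth of 0 as "no estimate" are all assumptions made for this example; a real app would typically run this test on the GPU.

```python
from typing import List, Optional

# Hypothetical representations for this sketch (not ARCore types):
# depths are distances from the camera in millimetres, 0 = no real-depth estimate.
DepthGrid = List[List[int]]
Pixel = str  # stand-in for an RGB value


def composite(camera: List[List[Pixel]],
              real_depth: DepthGrid,
              virtual: List[List[Optional[Pixel]]],
              virtual_depth: List[List[Optional[int]]]) -> List[List[Pixel]]:
    """Blend a virtual layer over the camera image using a per-pixel depth test."""
    out: List[List[Pixel]] = []
    for y, row in enumerate(camera):
        out_row: List[Pixel] = []
        for x, cam_px in enumerate(row):
            v_px = virtual[y][x]
            v_d = virtual_depth[y][x]
            real_d = real_depth[y][x]
            if v_px is None or v_d is None:
                # No virtual content here: show the camera pixel.
                out_row.append(cam_px)
            elif real_d == 0 or v_d < real_d:
                # Unknown real depth, or the virtual object is in front: draw it.
                out_row.append(v_px)
            else:
                # A real surface is closer: the virtual pixel is occluded.
                out_row.append(cam_px)
        out.append(out_row)
    return out


if __name__ == "__main__":
    # A virtual robot 1.0 m away; a real chair at 0.8 m covers the middle pixel.
    camera = [["wall", "chair", "floor"]]
    real_depth = [[1500, 800, 0]]
    virtual = [["robot", "robot", "robot"]]
    virtual_depth = [[1000, 1000, 1000]]
    print(composite(camera, real_depth, virtual, virtual_depth))
```

In the usage example, the chair hides the robot only in the middle pixel, which is exactly the "object behind a real-world object" effect described above.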

Snapchat Lens Creators can download a Depth API template to build their own depth-based experiences for Android devices. TeamViewer Pilot, a remote assistance app, is using the Depth API to enable augmented reality annotations during video calls. Google says more depth-enabled AR experiences that make use of surface interactions and environmental traversal will arrive later this year. For example, a game called SKATRIX will turn your home into a digital skate park, while another game called SPLASHAAR will pit AR snails in a race across your room. Developers can experiment with these concepts through the open-source project Google has published on GitHub.

Google also points out that time-of-flight (ToF) sensors, while not required, can improve the experience by reducing scanning time and improving plane detection. Samsung, for example, will update its Quick Measure app to use the ARCore Depth API on the Galaxy Note 10+ and Galaxy S20 Ultra, both of which have ToF sensors. However, because the Depth API only requires a single RGB camera, Google notes that it will generally work on the hundreds of millions of Android devices that support Google Play Services for AR.

The post Google’s ARCore Depth API is now available for developers to make realistic AR experiences appeared first on xda-developers.

